Automated spear phishing using machine learning

Abstract

–DO THIS LAST


Introduction

Phishing is a social engineering technique that attempts to obtain sensitive information (such as passwords, credit card details, …) from the target, typically using email spoofing or instant messaging on social media. The target is typically redirected to a fake website that looks like the original website and asks for sensitive information. Another possibility is that clicking the malicious link installs malicious software (ransomware, a keylogger, spyware, …) on the target's device.

Spear phishing is a targeted phishing attempt (directed at specific individuals or companies) that requires gathering data on the targets and profiling them. Using the target's personal information to gain the target's trust leads to a higher success rate than bulk phishing.

Spear phishing has grown to be the predominant vector used to compromise an organization [3].

Social media sites such as Facebook, Twitter and LinkedIn, which give users a strong incentive to disclose personal data, can provide an adversary with a wealth of information on a target's work interests and expertise. It can be argued that social media culture makes phishing easier than email does: receiving tweets from strangers is more common than receiving an unexpected email, and shortened links are widely used.

These natural weaknesses, present at scale, are just waiting to be exploited. How? This is where machine learning comes into play.

Machine learning (ML) and artificial intelligence (AI) have become essential to any effective cybersecurity and defense strategy against previously unknown attacks, including in malware detection, intrusion detection and phishing detection [5].

While the security community has mostly used machine learning defensively, it can also be weaponized: combined with the data available on social media, it can be used to carry out malicious attacks.

Since inspecting and profiling targets is a critical and very time-consuming step in creating a believable phishing message, automating it could make spear phishing more efficient, allowing it to operate at a much larger scale with higher success rates.

Natural language processing is a subfield of AI that deals with raw unstructured text as a data source. It is particularly suitable for phishing because existing textual data can be used to identify the topics that the target is interested in and generate sentences which might be interesting to the target, and to which the target might respond.

This seminar discusses how threat actors can increase the effectiveness of phishing attacks by using ML as a malicious tool for profiling targets and generating phishing messages, using the SNAP_R tool as an example.

SNAP_R tool overview

The SNAP_R tool (Social Network Automated Phishing with Reconnaissance, or "snapper") [7], presented at DEF CON and Black Hat in 2016 [6], uses Twitter for automated spear phishing. Twitter has several properties that make it a suitable platform for automated spear phishing:

- access to extensive personal data

- bot-friendly API

- colloquial syntax

- use of shortened URLs (which can be used to obfuscate a phishing domain)

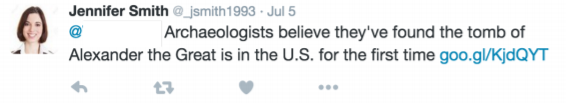

An example of a Twitter post:

Twitter profile example (taken from [6]):

At the time, Twitter posts were limited to 140 characters (the limit was raised to 280 in 2017), which leaves less room for grammatical errors than longer messages do. Together, these properties make it easier to generate human-like messages and avoid suspicion.

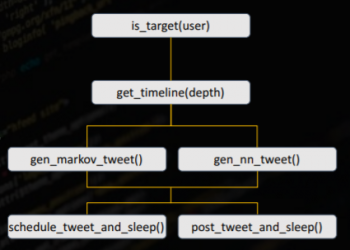

The workflow of the tool [6]:

The first step is determining whether a user is a valid target. High-value targets are identified based on their level of social engagement (number of followers, retweets, …), posted personal information (job, popularity, …), account details and the click rates of IP-tracked links.

SNAP_R uses either a recurrent neural network or a Markov model, trained on spear phishing penetration-testing data and tweets; both are described in more detail in the Model training section. The ML model is used to generate phishing posts that contain an embedded shortened phishing link and an @mention of the targeted user.

The second step is scraping the target's timeline to a specified depth to obtain the information that will be used to generate a phishing post (gen_markov_tweet(), gen_nn_tweet()).

Users are profiled by extracting topics from the posts on the target's timeline and from the users the target retweets or follows. One of the topics of the target's tweets and replies is used to seed the RNN (recurrent neural network) that generates the phishing tweet.

Seeding with the most frequent words (excluding stopwords such as the, in, at, that, which, …) proved to be the most effective approach [6]. The phishing tweet is sent either during the hour in which the target is most active (schedule_tweet_and_sleep()) or immediately (post_tweet_and_sleep()). The target's most active hour is determined simply by counting the total number of the target's tweets in each hour.
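Both heuristics above reduce to simple counting. The sketch below is an illustration under stated assumptions, not SNAP_R's code: the function names `top_seed_words` and `most_active_hour` and the short `STOPWORDS` set are hypothetical, and posts are assumed to be available as plain strings with `datetime` timestamps.

```python
import re
from collections import Counter
from datetime import datetime

# Hypothetical, deliberately short stopword list; a real system would use a fuller one.
STOPWORDS = {"the", "in", "at", "that", "which", "a", "an", "and",
             "of", "to", "is", "i", "it", "for", "on", "my"}

def top_seed_words(posts, k=3):
    """Return the k most frequent non-stopword words across the given posts."""
    counts = Counter()
    for post in posts:
        # Lowercase and keep alphabetic tokens (apostrophes allowed).
        for word in re.findall(r"[a-z']+", post.lower()):
            if word not in STOPWORDS:
                counts[word] += 1
    # Ties keep the order in which words were first encountered.
    return [word for word, _ in counts.most_common(k)]

def most_active_hour(timestamps):
    """Return the hour of day (0-23) in which the most posts were made."""
    per_hour = Counter(ts.hour for ts in timestamps)
    hour, _ = per_hour.most_common(1)[0]
    return hour

posts = ["Loving my new cat, the cat is great",
         "Cat videos in the morning",
         "Morning coffee and code"]
print(top_seed_words(posts, 2))  # ['cat', 'morning']
print(most_active_hour([datetime(2016, 8, 1, 9, 15),
                        datetime(2016, 8, 1, 21, 5),
                        datetime(2016, 8, 2, 21, 40)]))  # 21
```

Counting per hour of day (ignoring the date) matches the "count tweets in each hour" description; a more careful version might also account for the target's time zone.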

The posted tweet is visible only to users who follow both the target and the bot that generated it. Consequently, if the bot has no followers, the only person who can see the message is the target, which is desirable.

An example of a machine-generated tweet:

Other measures include obeying Twitter's rate limits and posting non-phishing tweets in order to build a believable profile and avoid detection. The authors also experimented with additional features, such as the sentiment of the target's topics.

The tool and the techniques used to create it are described in more detail in the following sections.

Automated target discovery

TODO

Repeat main points: k-means++, silhouette score, rule-based methods.

URL shortening

Besides keeping Twitter posts short, shortening the link also obfuscates the malicious URL, which the target might otherwise recognize, and helps it get past blacklists of known malicious links.

Not all URL shorteners allow malicious links to be shortened, so the authors of [6] had to try out several of them to find a suitable one. Multiple options were suitable, but goo.gl was chosen for shortening the malicious link because it provides additional features.

Some of these extra features are reporting the target's browser, operating system and IP address location (country), and generating unique shortened links for the same URL, …

No real malicious links were used during testing; the authors only measured the click-through rate.

Model training

Markov model

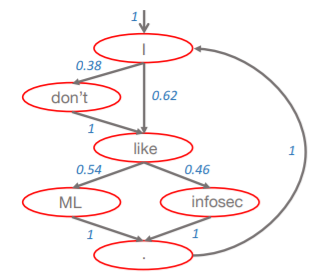

A Markov model is a stochastic model of a randomly changing system in which the future state depends only on the current state. Training is simple: the transition probabilities (the probability that a given word follows the current word) are learned from the training set, which in this case consists of all posts from the target, by counting how many times each pair of words appears one after the other. The counts are then normalized into probabilities by dividing them by the total count for the given word.

For example, if the training data contains many instances of the phrase 'the cat in the hat', then whenever the model generates the word 'the', it will most likely generate 'cat' or 'hat' as the next word.
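The counting and normalization step can be written as a formula. Here C(w_i, w_j) is notation introduced for this illustration: the number of times word w_j immediately follows word w_i in the training posts. Taking a single occurrence of 'the cat in the hat' as the whole training set gives the worked example on the second line.

```latex
\begin{aligned}
P(w_j \mid w_i) &= \frac{C(w_i, w_j)}{\sum_k C(w_i, w_k)} \\
C(\text{the}, \text{cat}) = C(\text{the}, \text{hat}) = 1
\;&\Rightarrow\;
P(\text{cat} \mid \text{the}) = P(\text{hat} \mid \text{the}) = \frac{1}{1 + 1} = 0.5
\end{aligned}
```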

Example structure of a Markov model:

The next word is selected using 'fortune wheel' selection, which means that each candidate is picked with its corresponding probability. (For example, in the picture a random number between 0 and 1 is generated; if it is < 0.38, 'don't' is selected as the next word, otherwise 'like' is selected.) This avoids always generating the most probable text sequence. It is also good practice to use words that started a sentence as the 'seed' (the word from which text generation starts), to avoid generating sentences such as 'ate the cat'.
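A minimal first-order sketch of the training, fortune-wheel selection and sentence-start seeding described above. It is written in plain Python for illustration only; the function names are hypothetical and this is neither SNAP_R's nor markovify's implementation. Sentences are assumed to be whitespace-tokenized strings.

```python
import random
from collections import defaultdict

END = None  # marker meaning "the sentence ends here"

def train(sentences):
    """Count word -> next-word transitions and normalize them into probabilities."""
    counts = defaultdict(lambda: defaultdict(int))
    starts = []
    for sentence in sentences:
        words = sentence.split()
        if not words:
            continue
        starts.append(words[0])  # sentence-initial words are used as seeds
        for current, nxt in zip(words, words[1:] + [END]):
            counts[current][nxt] += 1
    probs = {}
    for word, followers in counts.items():
        total = sum(followers.values())
        probs[word] = {nxt: c / total for nxt, c in followers.items()}
    return probs, starts

def wheel_select(options, rng):
    """Fortune-wheel selection: return a key with probability equal to its value."""
    r = rng.random()
    cumulative = 0.0
    for option, p in options.items():
        cumulative += p
        if r < cumulative:
            return option
    return option  # guard against floating-point rounding in the last slice

def generate(probs, starts, rng, max_words=20):
    """Start from a sentence-initial word and follow sampled transitions."""
    word = rng.choice(starts)
    out = [word]
    while len(out) < max_words:
        word = wheel_select(probs[word], rng)
        if word is END:
            break
        out.append(word)
    return " ".join(out)

probs, starts = train(["I like cats", "I don't like dogs"])
print(probs["I"])  # {'like': 0.5, "don't": 0.5}
print(generate(probs, starts, random.Random(0)))
```

Because every word of a training sentence is recorded as a "current" word, every word the generator can reach has at least one outgoing transition (possibly to `END`).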

Markov models are also agnostic to language, since they only use content on the target's timeline for training.

It is also possible to use a higher-order Markov chain, in which the future state depends not only on the current state but also on previous states. For example, a 2nd-order Markov model looks at the previous 2 words to predict the next word.
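To illustrate higher orders, the sketch below keys the transition table on a tuple of the previous n words instead of a single word. The function name and the `<s>`/`</s>` padding tokens are assumptions made for this example. (In markovify, the chain order is set through the `state_size` argument of `markovify.Text`.)

```python
from collections import Counter, defaultdict

def train_order_n(sentences, n=2):
    """Map each tuple of the previous n words to a Counter of the words that follow it."""
    table = defaultdict(Counter)
    for sentence in sentences:
        # Pad the start so the first real word also has an n-word history.
        words = ["<s>"] * n + sentence.split() + ["</s>"]
        for i in range(n, len(words)):
            state = tuple(words[i - n:i])
            table[state][words[i]] += 1
    return table

table = train_order_n(["the cat in the hat", "the cat sat"], n=2)
print(table[("in", "the")])   # Counter({'hat': 1})
print(table[("the", "cat")])  # Counter({'in': 1, 'sat': 1})
```

With n=2 the history ('in', 'the') can only be followed by 'hat', whereas a first-order model that sees only 'the' could also continue with 'cat'. Normalizing each Counter gives the transition probabilities exactly as in the first-order case.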

The Markov model is implemented using Python's markovify library.

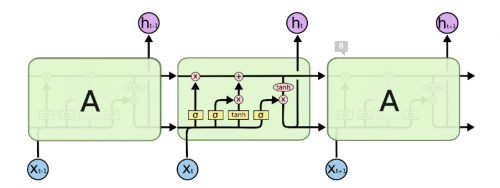

LSTM

LSTM (long short-term memory) is a type of recurrent neural network (RNN) that has feedback connections between units, which makes it suitable for sequential data (such as text sentences) and capable of learning long-term dependencies. This model has been applied very successfully to a variety of problems, from speech recognition and language modeling to machine translation, because language is naturally sequential and words that are far apart may still be related to one another.

An LSTM is a repeating, chain-like structure composed of LSTM units. An LSTM unit is composed of a cell and 3 gates: the input, output and forget gate. The cell remembers values over time, and the gates control the flow of information into and out of the cell.

The forget gate (lower left) removes information from the cell state (top horizontal line). The input gate (middle) updates the cell state. The output gate (right) filters the cell state to produce an output. Each of these gates has a matrix of weights (the input stage has 2: one for the input gate and one for the candidate values added to the cell state), which are learned using backpropagation, since all functions used are differentiable. The loss used in optimization is (categorical) cross-entropy loss. More details about how an LSTM works can be found in [12].
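For reference, the gates described above are commonly written as follows. This is the standard formulation, not taken from [6]: σ is the logistic sigmoid, ⊙ is element-wise multiplication and [h_{t-1}, x_t] is the concatenation of the previous hidden state and the current input. W_i and W_C are the two weight matrices of the input stage. The output layer (W_y, b_y) and the one-hot target y_t in the last line are assumptions about how the next-token prediction and the cross-entropy loss are attached.

```latex
\begin{aligned}
f_t &= \sigma(W_f \cdot [h_{t-1}, x_t] + b_f) \\
i_t &= \sigma(W_i \cdot [h_{t-1}, x_t] + b_i) \\
\tilde{C}_t &= \tanh(W_C \cdot [h_{t-1}, x_t] + b_C) \\
C_t &= f_t \odot C_{t-1} + i_t \odot \tilde{C}_t \\
o_t &= \sigma(W_o \cdot [h_{t-1}, x_t] + b_o) \\
h_t &= o_t \odot \tanh(C_t) \\
\hat{y}_t &= \mathrm{softmax}(W_y h_t + b_y), \qquad
\mathcal{L} = -\sum_t \sum_k y_{t,k} \log \hat{y}_{t,k}
\end{aligned}
```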

LSTM structure:

So how is an LSTM trained to generate words? First, the words must be represented in a form the LSTM can process.

An LSTM for text generation can operate at the character level or at the word level. At the character level, characters are represented using one-hot encoding, and the target output of the LSTM is the next character in the sequence. This approach can be generalized to n-grams (n-character parts of a word) [14]. At the word level, words are represented using either one-hot encoding or word embeddings [13], and the target output of the LSTM is the next word in the sequence.
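A small sketch of the character-level data preparation described above: build a vocabulary, one-hot encode characters, and slide a window over the text so that each input sequence is paired with the character that follows it. The helper names and the window length are illustrative assumptions, not details from [6].

```python
def build_vocab(text):
    """Map each distinct character to an index (sorted for reproducibility)."""
    return {ch: i for i, ch in enumerate(sorted(set(text)))}

def one_hot(ch, vocab):
    """Vector of zeros with a single 1 at the character's index."""
    vec = [0] * len(vocab)
    vec[vocab[ch]] = 1
    return vec

def training_pairs(text, seq_len):
    """Each input is seq_len consecutive characters; the target is the next character."""
    return [(text[i:i + seq_len], text[i + seq_len])
            for i in range(len(text) - seq_len)]

def encode_pair(context, target, vocab):
    """One-hot encode the input sequence and return the target as a class index."""
    return [one_hot(ch, vocab) for ch in context], vocab[target]

vocab = build_vocab("hello")
print(vocab)                       # {'e': 0, 'h': 1, 'l': 2, 'o': 3}
print(training_pairs("hello", 3))  # [('hel', 'l'), ('ell', 'o')]
```

The word-level variant is the same idea with tokens instead of characters; with word embeddings, `one_hot` would be replaced by a lookup into a learned embedding matrix.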

Text is generated by seeding the LSTM with a starting word or a starting sequence of characters. At each step, the hidden state ht is passed on to the next cell of the LSTM chain, and the predicted word or character is fed back as the next input. Multiple layers can be stacked, in which case the ht's of one layer are the inputs (xt's) of the next layer.
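To make the data flow concrete, here is a forward pass through stacked LSTM layers in plain Python, following the standard gate equations. The weights are random and untrained, all layers are assumed to share one hidden size, and every name is hypothetical; it only shows how each layer's ht becomes the next layer's xt, not how SNAP_R's network was built or trained.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def matvec(W, v):
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def lstm_step(params, x_t, h_prev, c_prev):
    """One LSTM time step; every gate sees the concatenation [h_prev, x_t]."""
    z = h_prev + x_t  # list concatenation
    f = [sigmoid(a + b) for a, b in zip(matvec(params["Wf"], z), params["bf"])]
    i = [sigmoid(a + b) for a, b in zip(matvec(params["Wi"], z), params["bi"])]
    c_tilde = [math.tanh(a + b) for a, b in zip(matvec(params["Wc"], z), params["bc"])]
    o = [sigmoid(a + b) for a, b in zip(matvec(params["Wo"], z), params["bo"])]
    c_t = [ft * cp + it * ct for ft, cp, it, ct in zip(f, c_prev, i, c_tilde)]
    h_t = [ot * math.tanh(ct) for ot, ct in zip(o, c_t)]
    return h_t, c_t

def init_layer(input_size, hidden_size, rng):
    """Small random weights and zero biases for one (untrained) layer."""
    def mat():
        return [[rng.uniform(-0.1, 0.1) for _ in range(hidden_size + input_size)]
                for _ in range(hidden_size)]
    params = {name: mat() for name in ("Wf", "Wi", "Wc", "Wo")}
    params.update({name: [0.0] * hidden_size for name in ("bf", "bi", "bc", "bo")})
    return params

def run_stack(layers, inputs, hidden_size):
    """Feed a sequence through stacked layers; layer k's h_t is layer k+1's x_t."""
    states = [([0.0] * hidden_size, [0.0] * hidden_size) for _ in layers]
    outputs = []
    for x_t in inputs:
        inp = x_t
        for k, params in enumerate(layers):
            h, c = lstm_step(params, inp, *states[k])
            states[k] = (h, c)  # carried to the next time step of this layer
            inp = h             # becomes the input of the layer above
        outputs.append(inp)     # top-layer h_t; a softmax over it would give next-token probabilities
    return outputs

rng = random.Random(0)
layers = [init_layer(4, 3, rng), init_layer(3, 3, rng)]  # 4-symbol one-hot input, 2 layers of 3 units
hs = run_stack(layers, [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0]], 3)
print(len(hs), len(hs[0]))  # 3 3
```

For generation, the token sampled from the top layer's output distribution would be one-hot encoded and appended to `inputs` as the next x_t.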

The authors trained an LSTM (TODO: character, n-gram or word level) with 3 layers of about 500 units each (equal to the size of the hidden state ht) on Amazon EC2, using a dataset of 2M tweets from verified users (obtained via the @verified account). Training took about 5 days.

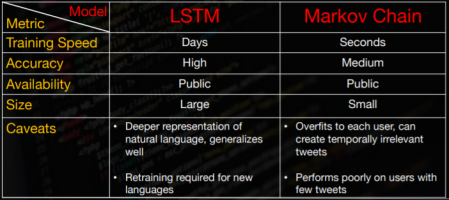

Comparison

The benefits and caveats of each model are compared in the following illustration, taken from [6]:

Results

An example of a generated message that succeeded in phishing:

Success is measured by the click-through rate on the shortened links in the generated phishing messages.

The authors [6] reported a 17% click-through rate 2 hours after posting 90 phishing posts (using #cat), and a 30% click-through rate after 2 days. These results are significantly better than the 5-14% reported for large-scale phishing campaigns [15] and comparable to the 45% reported for large-scale manual spear phishing [16]. They stem from the risks inherent in social media combined with the use of machine learning to target vulnerable users with highly personalized messages.

Comparison to a human

The authors [6] organized a competition between a human and the SNAP_R tool. Over a two-hour period, each targeted people with phishing posts, and whoever collected the most clicks won.

The human was permitted to create as many Twitter personas (accounts) as he/she wanted before the competition. During the competition, he/she used pre-made tweets, which he/she would copy and paste, tweak slightly and send to users posting the respective hashtags.

Copying and pasting turned out to be a problem, since Twitter prevents users from posting the same message too frequently.

A single machine ran the SNAP_R tool. SNAP_R sent phishing tweets to 819 users at 6.85 tweets/minute, resulting in 275 victims, a 33.6% success rate. The number of phishing tweets sent can be scaled arbitrarily by adding more machines.

The human managed to send 129 phishing tweets (by copying and pasting pre-made tweets) at 1.075 tweets/minute, for a total of 49 click-throughs, a 38% success rate.
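The reported figures can be cross-checked: each success rate is the number of victims divided by the number of targets, and dividing the number of tweets by the sending rate recovers the two-hour window of the competition. SNAP_R therefore obtained 275 / 49 ≈ 5.6 times as many victims as the human, at a slightly lower success rate.

```latex
\begin{aligned}
\text{SNAP\_R:}\quad & \frac{275}{819} \approx 0.336 = 33.6\%, &&
\frac{819\ \text{tweets}}{6.85\ \text{tweets/min}} \approx 119.6\ \text{min} \\
\text{Human:}\quad & \frac{49}{129} \approx 0.380 = 38.0\%, &&
\frac{129\ \text{tweets}}{1.075\ \text{tweets/min}} = 120\ \text{min}
\end{aligned}
```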

Conclusion

Sources

[1] https://en.wikipedia.org/wiki/Phishing

[2] M. C. Kotson and A. Schulz, "Characterizing phishing threats with natural language processing."

[3] B. Grow and M. Hosenball, "Special report: In cyberspy vs. cyberspy, China has the edge," 2011.

[4] A. C. Bahnsen, I. Torroledo, L. D. Camacho and S. Villegas, "DeepPhish: Simulating Malicious AI."

[7] SNAP_R

[8] Would you fall for this Twitter phishing attack?

[9] goo.gl

[10] Markov model

[12] LSTM

[13] word embeddings

[14] n-grams

[16] E. Bursztein et al., "Handcrafted fraud and extortion: Manual account hijacking in the wild."